Deploying a Scalable E-commerce Platform with Kubernetes

Deployed a scalable e-commerce platform using Kubernetes on EKS, achieving zero-downtime updates, automated scaling, and secure microservice communication.

Situation

Ecomm Solutions, a rapidly growing e-commerce platform, faced significant challenges with unpredictable traffic spikes during sales events, leading to performance bottlenecks and occasional downtime. The platform, comprising microservices like product catalog, user authentication, and payment processing, was hosted on a public cloud provider. However, the existing infrastructure struggled with:

- Unpredictable Traffic: Sales events caused latency and outages due to manual scaling.

- Inconsistent Deployments: Manual processes led to errors and prolonged release cycles.

- Security Concerns: Unrestricted pod communication posed risks of unauthorized access.

- Resource Inefficiencies: Over-provisioned resources increased costs during low-traffic periods.

- Data Persistence: The product catalog database required reliable storage for stateful operations.

To address these issues, Ecomm Solutions sought a Kubernetes-based solution to ensure high availability, automated scaling, secure communication, and optimized resource utilization.

Task

As DevOps engineers, our task was to design and implement a Kubernetes-based solution to:

- Deploy microservices with zero-downtime updates.

- Enable automatic scaling to handle traffic spikes.

- Secure inter-service communication with network policies.

- Ensure persistent storage for stateful services like the MongoDB database.

- Provide external access via a load balancer with proper routing.

The solution needed to leverage Infrastructure as Code (IaC) and integrate with CI/CD pipelines for operational efficiency.

Action

The DevOps team implemented the solution on Amazon EKS. The steps taken were as follows:

1. Cluster Setup and Namespace Isolation

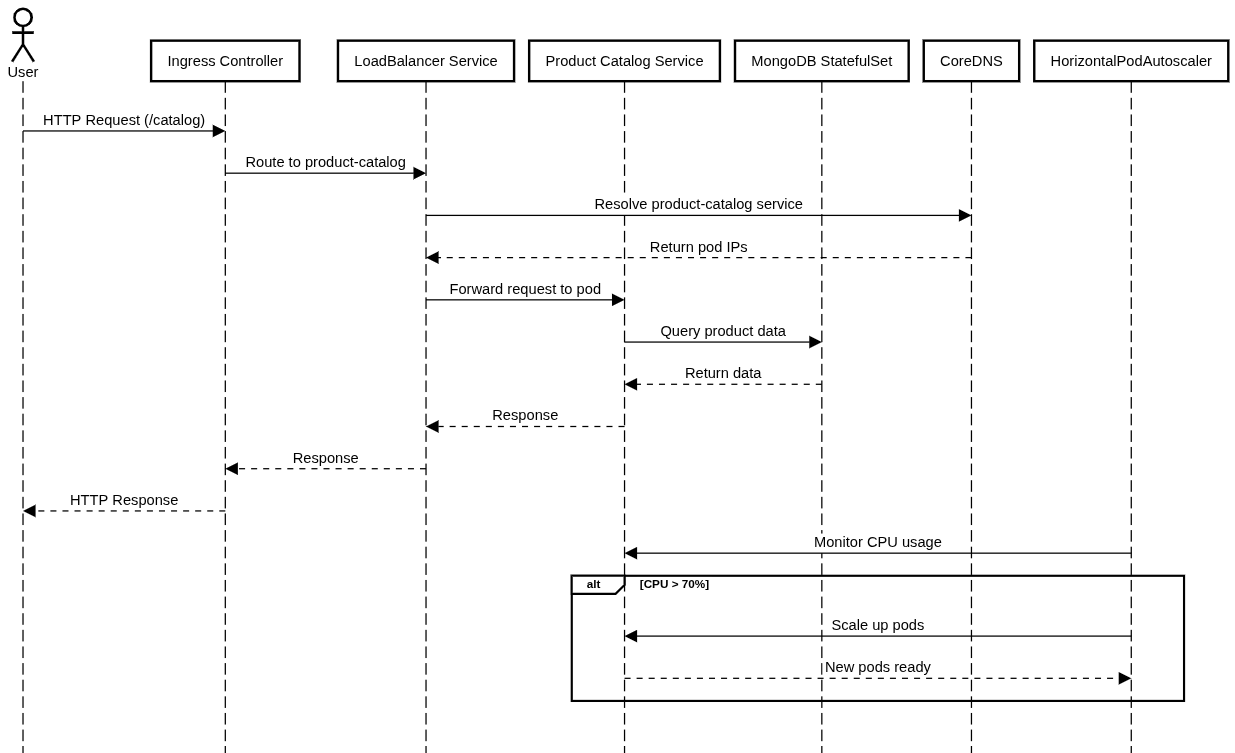

Amazon EKS was chosen for its scalability and integration with other AWS services, and namespaces provided logical separation for the microservices, improving security and resource management. We created the EKS cluster with Terraform for repeatable infrastructure, defined prod and staging namespaces with resource quotas so that no single namespace could consume more than its share of cluster capacity, and configured CoreDNS for service discovery.

For example, the namespace configuration was set as:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: prod
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: prod-quota
  namespace: prod
spec:
  hard:
    cpu: "10"
    memory: "20Gi"
    pods: "50"
```
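Because this quota caps `cpu` and `memory` (which a ResourceQuota treats as `requests.cpu` and `requests.memory`), the API server rejects any pod in `prod` that does not declare CPU and memory requests. A LimitRange can supply defaults so that such pods are still admitted. A minimal sketch; the name `prod-defaults` and all values are illustrative assumptions, not the team's actual settings:

```yaml
# Hypothetical per-container defaults for the prod namespace.
apiVersion: v1
kind: LimitRange
metadata:
  name: prod-defaults        # hypothetical name
  namespace: prod
spec:
  limits:
    - type: Container
      defaultRequest:        # applied when a container omits resources.requests
        cpu: "100m"
        memory: "128Mi"
      default:               # applied when a container omits resources.limits
        cpu: "500m"
        memory: "512Mi"
```

Containers that set their own requests and limits, like product-catalog below, are unaffected; the defaults only fill gaps.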

2. Deploying Microservices with Deployments and ReplicaSets

Deployments provided high availability and zero-downtime updates for stateless microservices, with each Deployment's ReplicaSet keeping the desired number of pods running. We created Deployments for microservices such as product-catalog with three replicas, used rolling updates with maxUnavailable: 0 and maxSurge: 1 (so a new pod must become ready before an old one is removed), and configured readiness and liveness probes to track pod health. The configuration for the product-catalog Deployment was:

Example

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: product-catalog
  namespace: prod
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0
      maxSurge: 1
  selector:
    matchLabels:
      app: product-catalog
  template:
    metadata:
      labels:
        app: product-catalog
    spec:
      containers:
        - name: product-catalog
          image: product-catalog:v1.0
          resources:
            limits:
              cpu: "500m"
              memory: "512Mi"
            requests:
              cpu: "200m"
              memory: "256Mi"
          readinessProbe:
            httpGet:
              path: /health
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 10
          livenessProbe:
            httpGet:
              path: /health
              port: 8080
            initialDelaySeconds: 15
            periodSeconds: 20
```
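Rolling updates cover releases, but pods can also be evicted when a node is drained, for example when the cluster autoscaler removes an underused node. A PodDisruptionBudget limits how many product-catalog pods such voluntary evictions may take down at once. This is a sketch of a complementary safeguard rather than part of the described setup; the name and the `minAvailable` value are assumptions:

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: product-catalog-pdb   # hypothetical name
  namespace: prod
spec:
  minAvailable: 2             # illustrative: keep at least 2 of the 3 replicas running during drains
  selector:
    matchLabels:
      app: product-catalog    # same label the Deployment's pods carry
```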

3. Persistent Storage for Stateful Services

The product catalog's MongoDB database required persistent storage, so it ran as a StatefulSet, which gives each pod a stable identity. We used dynamic Persistent Volume Claims (PVCs) backed by AWS EBS, configured a StorageClass for gp3 volumes, and relied on the stable pod identities for replica set configuration. The configuration was defined as:

Example

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: standard
# Amazon EBS CSI driver (EKS add-on); the in-tree kubernetes.io/aws-ebs provisioner is deprecated.
provisioner: ebs.csi.aws.com
# Create each volume in the availability zone where its pod is scheduled.
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mongo
  namespace: prod
spec:
  serviceName: mongo
  replicas: 2
  selector:
    matchLabels:
      app: mongo
  template:
    metadata:
      labels:
        app: mongo
    spec:
      containers:
        - name: mongo
          image: mongo:4.4
          volumeMounts:
            - name: mongo-data
              mountPath: /data/db
  volumeClaimTemplates:
    - metadata:
        name: mongo-data
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: standard
        resources:
          requests:
            storage: 10Gi
```
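The StatefulSet's `serviceName: mongo` refers to a governing headless Service, which Kubernetes does not create automatically and which the manifest above does not include. That Service is what gives each replica a stable DNS name such as `mongo-0.mongo.prod.svc.cluster.local`, which a MongoDB replica set configuration can reference. A minimal sketch, assuming MongoDB listens on its default port 27017:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mongo            # must match spec.serviceName in the StatefulSet
  namespace: prod
spec:
  clusterIP: None        # headless: DNS resolves to the individual pod addresses
  selector:
    app: mongo
  ports:
    - name: mongo
      port: 27017
      targetPort: 27017
```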

4. Service Discovery and Traffic Routing

Services provided stable endpoints for internal communication, and an Ingress handled external HTTP routing. We configured ClusterIP Services for the microservices, used the NGINX Ingress Controller for URL-based routing, and exposed public APIs through an AWS load balancer with session affinity for consistent user sessions. The setup included:

- Defined ClusterIP Services for internal communication between microservices (e.g., product-catalog to authentication).

- Configured an Ingress resource with an NGINX Ingress Controller to route external traffic based on URL paths (e.g., /catalog to product-catalog service).

- Used LoadBalancer Services for public-facing APIs, leveraging the cloud provider’s load balancer.

- Enabled session affinity (sessionAffinity: ClientIP) for consistent user experiences.

Example

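```yaml
apiVersion: v1
kind: Service
metadata:
  name: product-catalog
  namespace: prod
spec:
  selector:
    app: product-catalog
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ecomm-ingress
  namespace: prod
  annotations:
    # Strip the /catalog prefix but keep the rest of the path, so
    # /catalog/items/42 reaches the service as /items/42.
    # (A plain rewrite-target of "/" would send every /catalog/... request to "/".)
    nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx
  rules:
    - host: ecomm.example.com
      http:
        paths:
          - path: /catalog(/|$)(.*)
            pathType: ImplementationSpecific
            backend:
              service:
                name: product-catalog
                port:
                  number: 80
```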
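The setup also used LoadBalancer Services with `sessionAffinity: ClientIP` for public-facing APIs, which the example does not show. A minimal sketch of such a Service; the name `public-api`, its pod label, and its ports are hypothetical:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: public-api            # hypothetical public-facing service
  namespace: prod
spec:
  type: LoadBalancer          # EKS provisions an AWS load balancer for this Service
  selector:
    app: public-api           # hypothetical pod label
  sessionAffinity: ClientIP   # send requests from the same client IP to the same pod
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800   # 3 hours, the Kubernetes default
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
```

Service-level affinity only applies to traffic routed by kube-proxy. The NGINX Ingress Controller sends requests directly to pod endpoints by default, so sticky sessions on the `/catalog` route would come from the controller's cookie-based affinity annotation (`nginx.ingress.kubernetes.io/affinity: "cookie"`) instead. Whether pods see the real client address, which `ClientIP` affinity keys on, also depends on the load balancer type and the Service's `externalTrafficPolicy`.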

5. Network Policies for Security

Network Policies restricted unauthorized pod access, enhancing security. We installed Calico for network policy enforcement and defined policies to allow traffic only from product-catalog to mongo. An example policy was:

Example

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: mongo-access
  namespace: prod
spec:
  podSelector:
    matchLabels:
      app: mongo
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: product-catalog
      ports:
        - protocol: TCP
          port: 27017
```
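The mongo-access policy only protects the mongo pods; other pods in `prod` still accept traffic from anywhere in the cluster. A common complement is a namespace-wide default-deny policy plus explicit allowances. A sketch, assuming the NGINX Ingress Controller runs in a namespace named `ingress-nginx`; both policy names are hypothetical:

```yaml
# Deny all inbound traffic to pods in prod unless another policy allows it.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: prod
spec:
  podSelector: {}          # selects every pod in the namespace
  policyTypes:
    - Ingress              # no ingress rules are listed, so all inbound traffic is denied
---
# Re-allow traffic from the NGINX Ingress Controller to product-catalog.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-ingress-controller
  namespace: prod
spec:
  podSelector:
    matchLabels:
      app: product-catalog
  policyTypes:
    - Ingress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: ingress-nginx   # assumed controller namespace
      ports:
        - protocol: TCP
          port: 8080       # policies match the pod's port, not the Service port
```

With the default deny in place, every other internal call path, such as product-catalog to authentication, needs its own allow rule; product-catalog to mongo is already covered by mongo-access.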

6. Autoscaling

The Horizontal Pod Autoscaler (HPA) scaled pods to absorb traffic spikes, balancing performance and cost. We configured an HPA for product-catalog targeting 70% average CPU utilization and enabled the Cluster Autoscaler to add or remove nodes as pod demand changed. The HPA configuration was:

Example

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: product-catalog-hpa
  namespace: prod
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: product-catalog
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
```
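The HPA measures utilization against each pod's CPU request, not its limit, so with the 200m request from step 2 the 70% target corresponds to about 140m of CPU per pod. The Kubernetes documentation gives the scaling rule below; the two load levels plugged into it are hypothetical, chosen to show normal scaling and the `maxReplicas` cap (250% of the request equals the 500m limit, the most a pod can use):

$$
\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentUtilization}}{\text{targetUtilization}} \right\rceil
$$

$$
\left\lceil 3 \times \frac{140\%}{70\%} \right\rceil = 6,
\qquad
\left\lceil 3 \times \frac{250\%}{70\%} \right\rceil = \lceil 10.71 \rceil = 11 \rightarrow 10 \ (\text{capped by maxReplicas})
$$

By default the HPA also skips scaling while this ratio stays within 10% of 1.0, so small fluctuations around the target do not cause churn.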

7. Secrets Management

Sensitive MongoDB credentials were stored in a Kubernetes Secret and exposed to the application as environment variables. The configuration included:

Example

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mongo-credentials
  namespace: prod
type: Opaque
data:
  # Values are base64-encoded, not encrypted.
  username: YWRtaW4=      # "admin"
  password: cGFzc3dvcmQ=  # "password"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: product-catalog
  namespace: prod
spec:
  replicas: 3
  selector:
    matchLabels:
      app: product-catalog
  template:
    metadata:
      labels:
        app: product-catalog
    spec:
      containers:
        - name: product-catalog
          image: product-catalog:v1.0
          # Resources and probes from step 2 are omitted here for brevity;
          # keep them when applying, since the prod quota requires resource requests.
          env:
            - name: MONGO_USERNAME
              valueFrom:
                secretKeyRef:
                  name: mongo-credentials
                  key: username
            - name: MONGO_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mongo-credentials
                  key: password
```
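The same Secret could also supply the database side. The official `mongo` image reads `MONGO_INITDB_ROOT_USERNAME` and `MONGO_INITDB_ROOT_PASSWORD` to create a root user, but only when it initializes an empty data directory, so changing the Secret later does not change an existing user. A sketch of the container section of the step 3 StatefulSet with these variables added; wiring MongoDB to this Secret is an assumption, since the original configuration does not show it:

```yaml
# Fragment: spec.template.spec.containers of the mongo StatefulSet from step 3.
containers:
  - name: mongo
    image: mongo:4.4
    env:
      - name: MONGO_INITDB_ROOT_USERNAME   # read by the official image on first init only
        valueFrom:
          secretKeyRef:
            name: mongo-credentials
            key: username
      - name: MONGO_INITDB_ROOT_PASSWORD
        valueFrom:
          secretKeyRef:
            name: mongo-credentials
            key: password
    volumeMounts:
      - name: mongo-data
        mountPath: /data/db
```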

8. Monitoring and Health Checks

Liveness and readiness probes kept pods healthy, and Prometheus and Grafana, deployed via Helm, provided performance monitoring. The probes are part of the Deployment configuration shown in step 2.

Why: Liveness and readiness probes ensure pods are healthy and ready to serve traffic, preventing downtime due to unhealthy containers.

How:

- Configured liveness probes to restart a container when its /health endpoint stops responding successfully.

- Used readiness probes so that pods receive traffic only when they are ready.

- Integrated Prometheus and Grafana (via Helm charts) for monitoring pod metrics and visualizing performance.
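Prometheus also needs to know which endpoints to scrape. If the Helm deployment used the kube-prometheus-stack chart, which installs the Prometheus Operator, this is usually declared with a ServiceMonitor. A sketch under several assumptions not stated in the original: the chart release is named `monitoring` (by default the chart's Prometheus selects ServiceMonitors labeled `release: <release name>`), the product-catalog Service carries the label `app: product-catalog` and names its port `http`, and the application exposes metrics at `/metrics`:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: product-catalog
  namespace: prod
  labels:
    release: monitoring        # hypothetical Helm release name of kube-prometheus-stack
spec:
  selector:
    matchLabels:
      app: product-catalog     # matches labels on the Service, not on the pods
  endpoints:
    - port: http               # assumed name of the Service port
      path: /metrics           # assumed metrics path
      interval: 30s
```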

9. CI/CD Integration

Why: Automating deployments reduces human error and ensures consistent updates.

How:

- Used a CI/CD pipeline (e.g., Jenkins or GitHub Actions) to build and push container images to a registry (e.g., Docker Hub).

- Utilized Helm charts to manage Kubernetes manifests, enabling templated deployments and rollbacks.

- Triggered rolling updates via kubectl apply or Helm upgrades after image updates.
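The pipeline steps above can be sketched as a GitHub Actions workflow, one of the two CI options named. Everything specific here is an assumption for illustration: the Docker Hub repository `example-org/product-catalog`, the cluster name `ecomm-cluster`, the region, the chart path `./charts/product-catalog` (assumed to expose `image.repository` and `image.tag` values, as charts generated by `helm create` do), and the repository secrets. Static AWS access keys keep the sketch short; OIDC-based role assumption is the usual alternative.

```yaml
# Hypothetical .github/workflows/deploy.yml
name: deploy-product-catalog

on:
  push:
    branches: [main]

env:
  IMAGE_REPO: example-org/product-catalog   # hypothetical Docker Hub repository

jobs:
  build-and-deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - name: Log in to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Build and push an image tagged with the commit SHA
        uses: docker/build-push-action@v6
        with:
          context: .
          push: true
          tags: ${{ env.IMAGE_REPO }}:${{ github.sha }}

      - name: Configure AWS credentials
        uses: aws-actions/configure-aws-credentials@v4
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: us-east-1             # hypothetical region

      - name: Install Helm 3
        uses: azure/setup-helm@v4
        with:
          version: v3.14.4

      - name: Roll out the new image
        run: |
          aws eks update-kubeconfig --name ecomm-cluster --region us-east-1
          # --atomic waits for the rollout and rolls the release back if it fails.
          helm upgrade --install product-catalog ./charts/product-catalog \
            --namespace prod \
            --set image.repository=${{ env.IMAGE_REPO }} \
            --set image.tag=${{ github.sha }} \
            --atomic --timeout 10m
```

Assuming the chart renders the Deployment from step 2, changing only the image tag triggers the same rolling update (maxUnavailable: 0, maxSurge: 1), and a release whose new pods never become ready is rolled back automatically.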

Result:

- High Availability: Achieved zero-downtime deployments using rolling updates and maintained service availability with ReplicaSets ensuring three replicas per microservice.

- Scalability: HPA dynamically scaled pods to handle traffic spikes, reducing latency during sales events. The Cluster Autoscaler added nodes during peaks and removed underused ones afterward, keeping costs in check.

- Security: Network Policies restricted unauthorized access, and Secrets ensured secure credential management.

- Reliability: Persistent storage kept MongoDB data durable across pod restarts and rescheduling. Health checks minimized downtime by restarting unhealthy containers.

- Cost Efficiency: Resource quotas and efficient pod scaling reduced over-provisioning, lowering cloud costs by 20% during normal operation.

- Developer Productivity: Helm charts and CI/CD integration streamlined deployments, reducing release cycles from days to hours.

This solution enabled Ecomm Solutions to support growth, handle traffic spikes, and maintain a secure, scalable platform.

Architectural Diagram

The architecture includes an EKS cluster with prod and staging namespaces, hosting microservices like product-catalog and MongoDB. External traffic routes through an AWS Load Balancer and NGINX Ingress, while Calico enforces network policies. Persistent storage uses AWS EBS, and monitoring is handled by Prometheus and Grafana. See the architecture image above for a visual representation.
